Oct '25

Excited to receive the Amazon AI Fellowship!

Aug '25

Two papers (Sky-T1 and S*) were accepted to Findings of EMNLP '25!

May '25

Excited to announce the release of SkyRL, an RL framework for training real-world, long-horizon agents!

Feb '25

Invited talk at the SLICE Lab, UC Berkeley, hosted by Prof. Sophia Shao, on MoE-Lightning.

Feb '25

Invited talk at the SAMPL group, University of Washington, hosted by Zihao Ye, on MoE-Lightning and Sky-T1.

Feb '25

Sky-T1 is featured in The New York Times, The Wall Street Journal, and The Information!

Oct '24

Two papers (MoE-Lightning and GraphPipe) are accepted at ASPLOS '25!

Oct '23

Released S-LoRA, a scalable system for serving thousands of LoRA adapters concurrently!

Aug '23

Graduated from ETH and joined UC Berkeley EECS!

Shiyi Cao, Shu Liu, Tyler Griggs, Peter Schafhalter, Xiaoxuan Liu, Ying Sheng, Joseph E. Gonzalez, Matei Zaharia, Ion Stoica. ASPLOS 2025. Mixture of Experts; LLM Batch Inference; CPU Offloading.

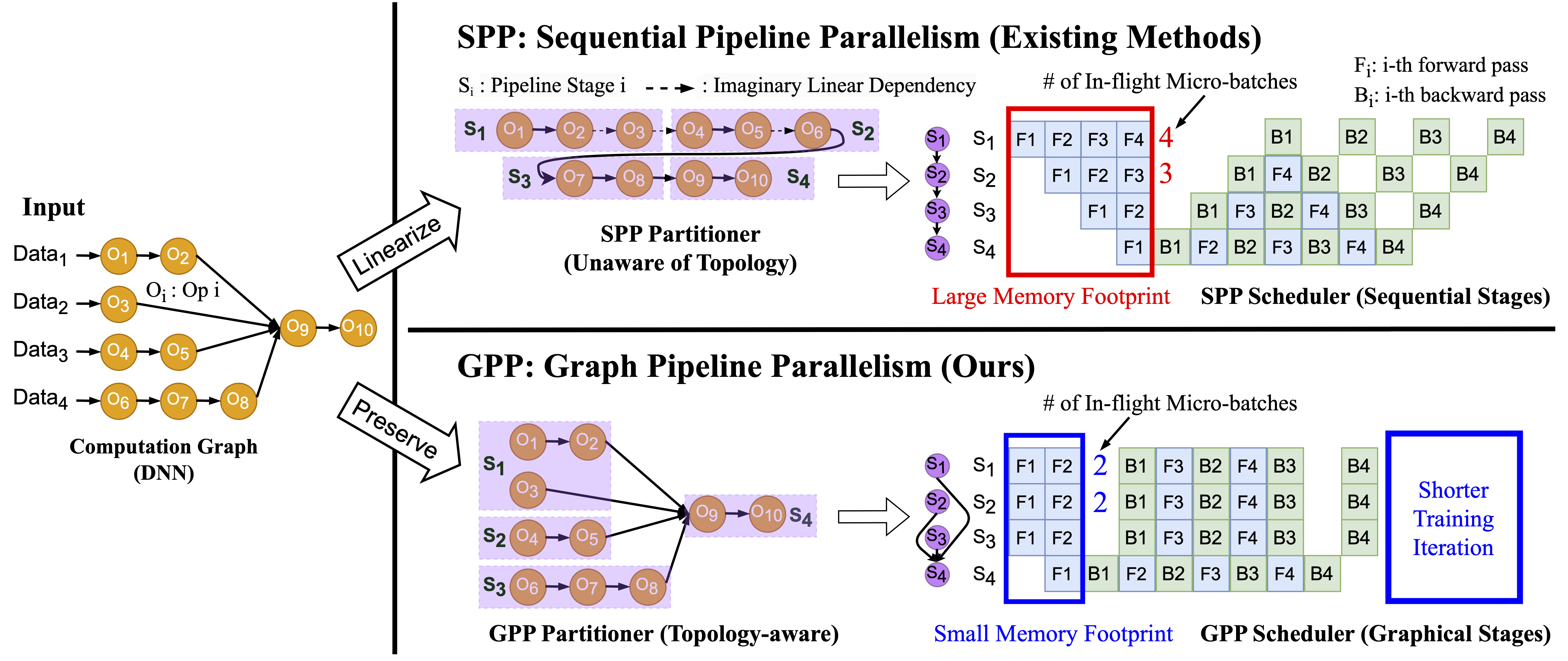

Byungsoo Jeon*, Mengdi Wu*, Shiyi Cao*, Sunghyun Kim*, Sunghyun Park, Neeraj Aggarwal, Colin Unger, Daiyaan Arfeen, Peiyuan Liao, Xupeng Miao, Mohammad Alizadeh, Gregory R. Ganger, Tianqi Chen, Zhihao Jia. ASPLOS 2025. Distributed Training; Pipeline Parallelism.

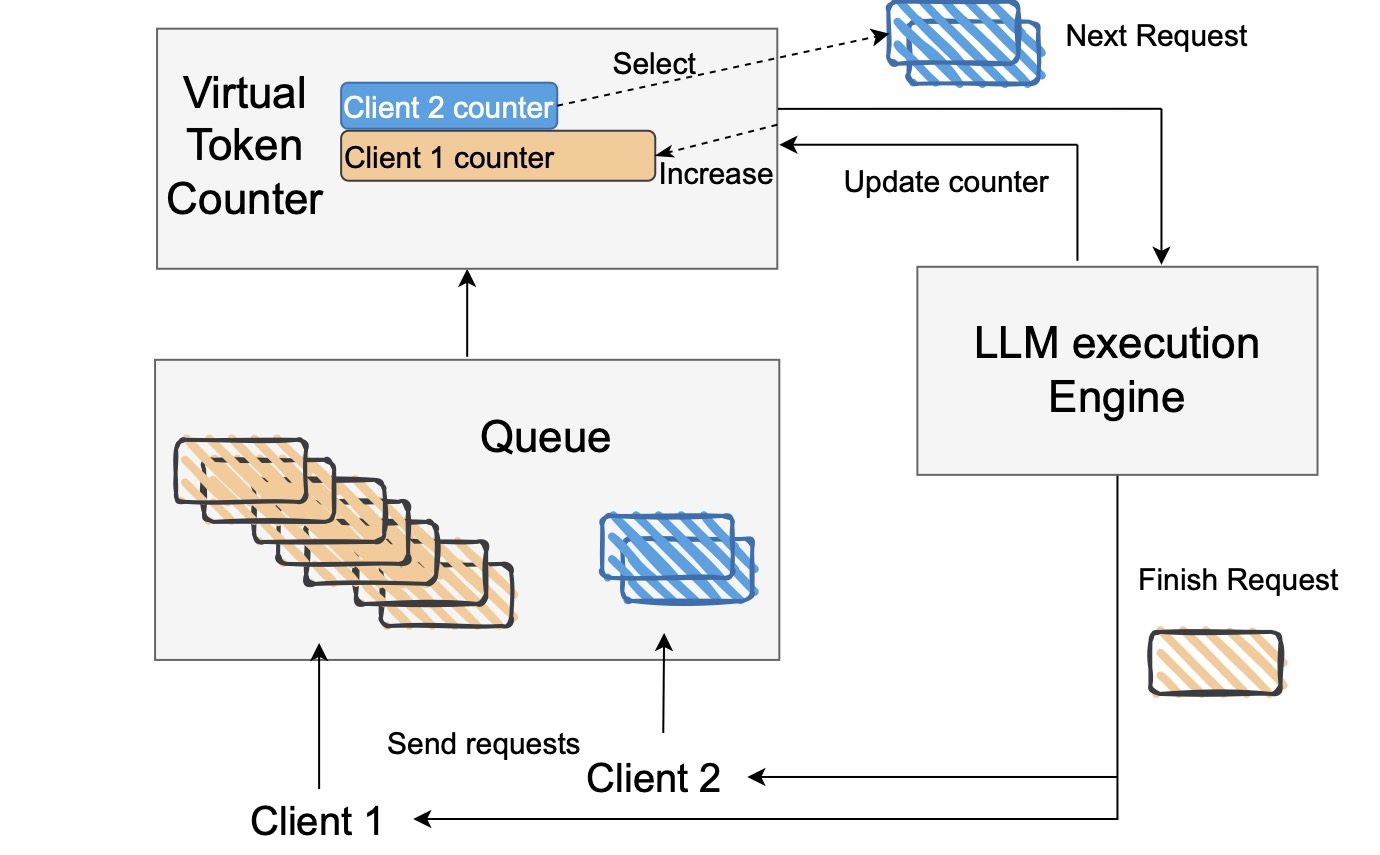

Ying Sheng, Shiyi Cao, Dacheng Li, Banghua Zhu, Zhuohan Li, Danyang Zhuo, Joseph E. Gonzalez, Ion Stoica. OSDI 2024. LLM Serving; Fair Scheduling.

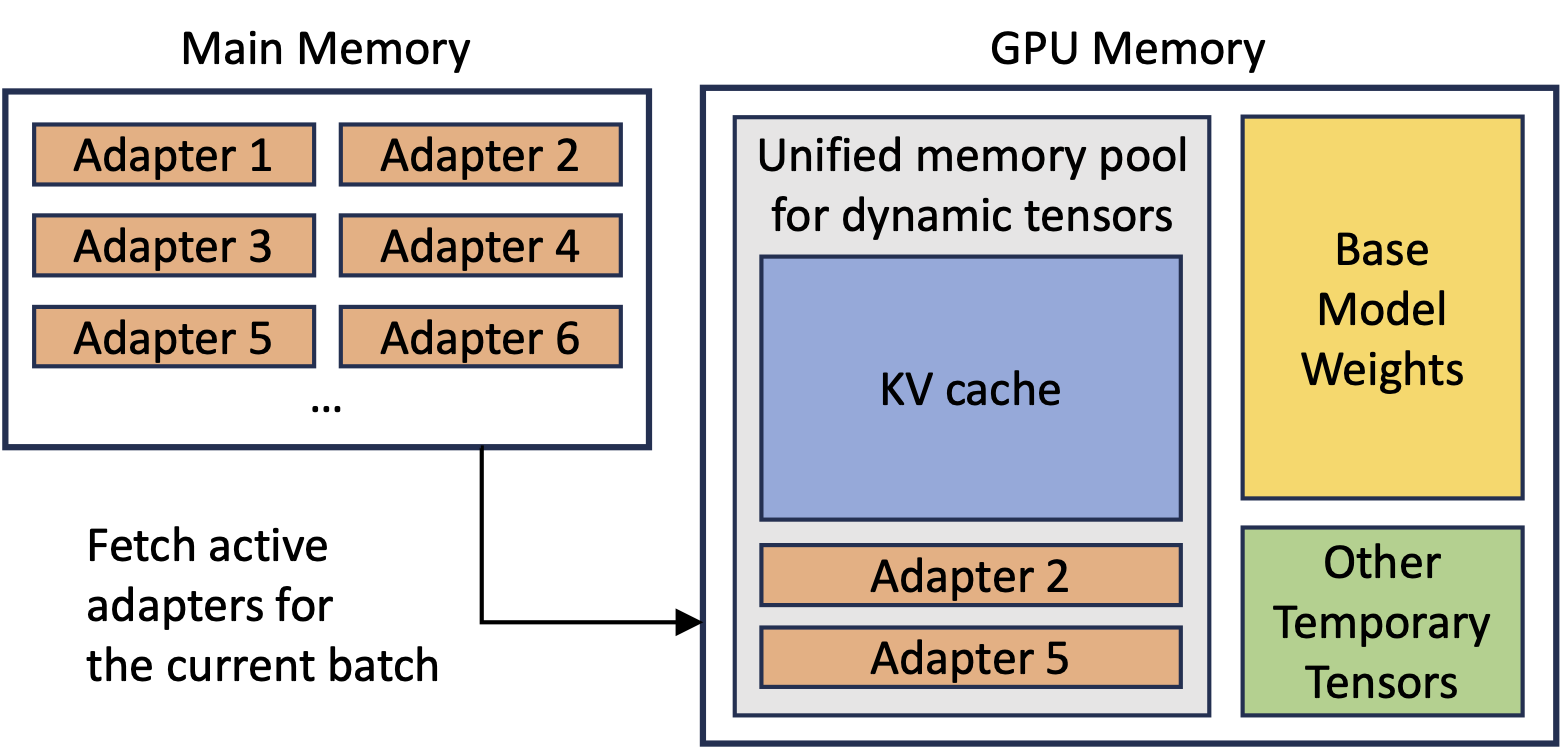

Ying Sheng*, Shiyi Cao*, Dacheng Li, Coleman Hooper, Nicholas Lee, Shuo Yang, Christopher Chou, Banghua Zhu, Lianmin Zheng, Kurt Keutzer, Joseph E. Gonzalez, Ion Stoica. MLSys 2024. LLM Inference; LoRA; Adapters; Memory Management.

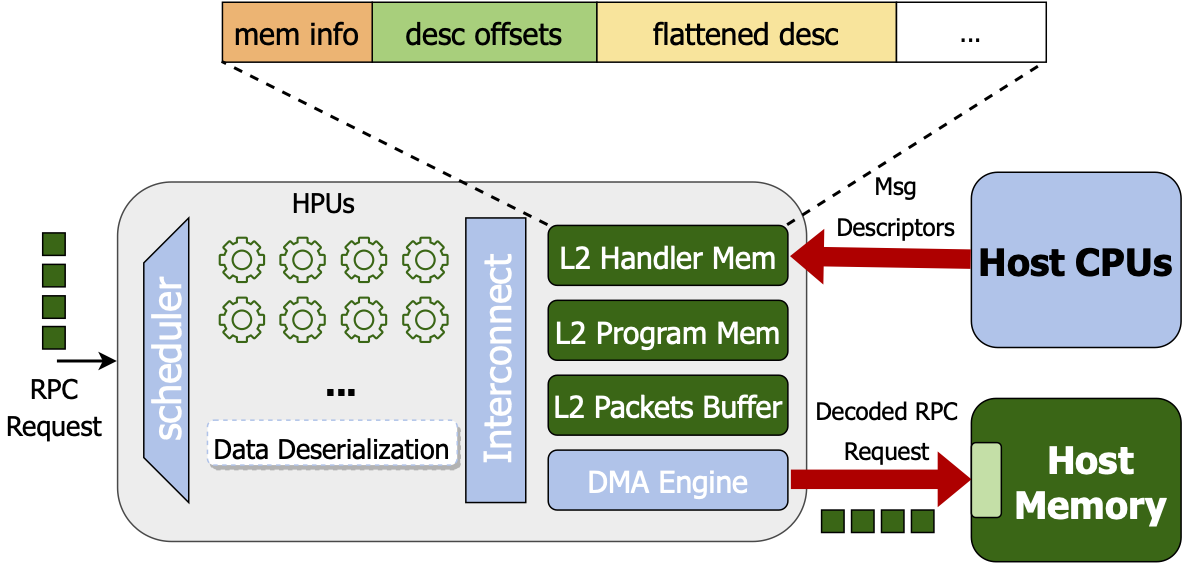

Shiyi Cao, Salvatore Di Girolamo, Torsten Hoefler. Workshop on Exascale MPI (ExaMPI@SC 2022). SmartNICs; In-Network Compute; Data (De)serialization.

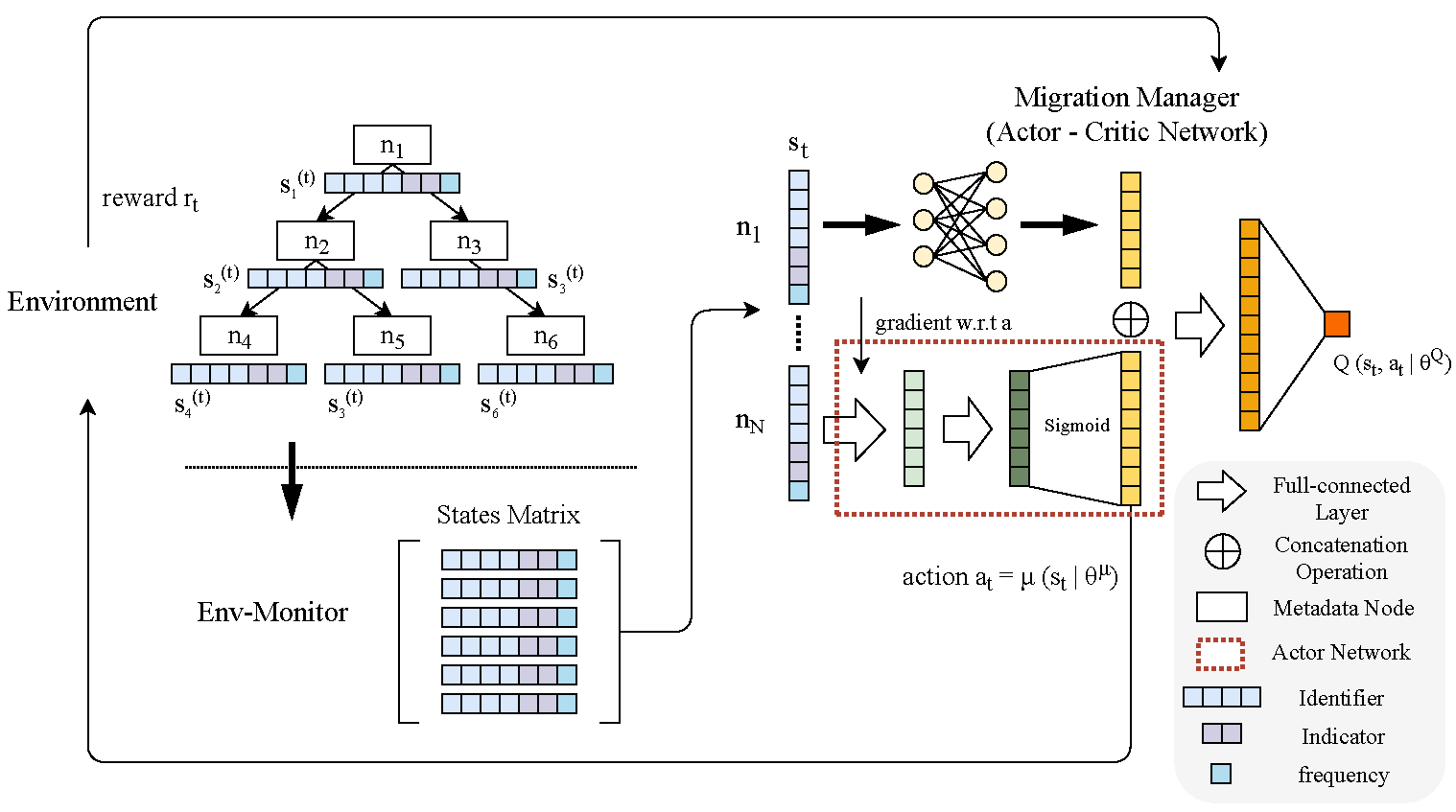

Shiyi Cao, Yuanning Gao, Xiaofeng Gao, Guihai Chen. International Conference on Parallel Processing (ICPP 2019). Distributed Systems; Metadata Management; Reinforcement Learning.